Software and control architecture

The robot development environment (RDE) ArmarX provides an infrastructure for developing a customized robot framework that enables the realization of distributed robot software components. To this end, ArmarX is organized in three layers: the middleware layer, which provides core features such as communication methods and deployment concepts; the robot framework layer, which consists of standardized components that can be tailored to customize the robot software framework; and the application layer, where robot programs are implemented. This layered architecture eases the development of robot software architectures through standard interfaces and ready-to-use components that can be customized, extended, or parametrized according to the robot's capabilities and application. To support the development process, ArmarX provides a set of tools such as plug-in-based graphical user interfaces, a statechart editor, and tools for online inspection and state disclosure.

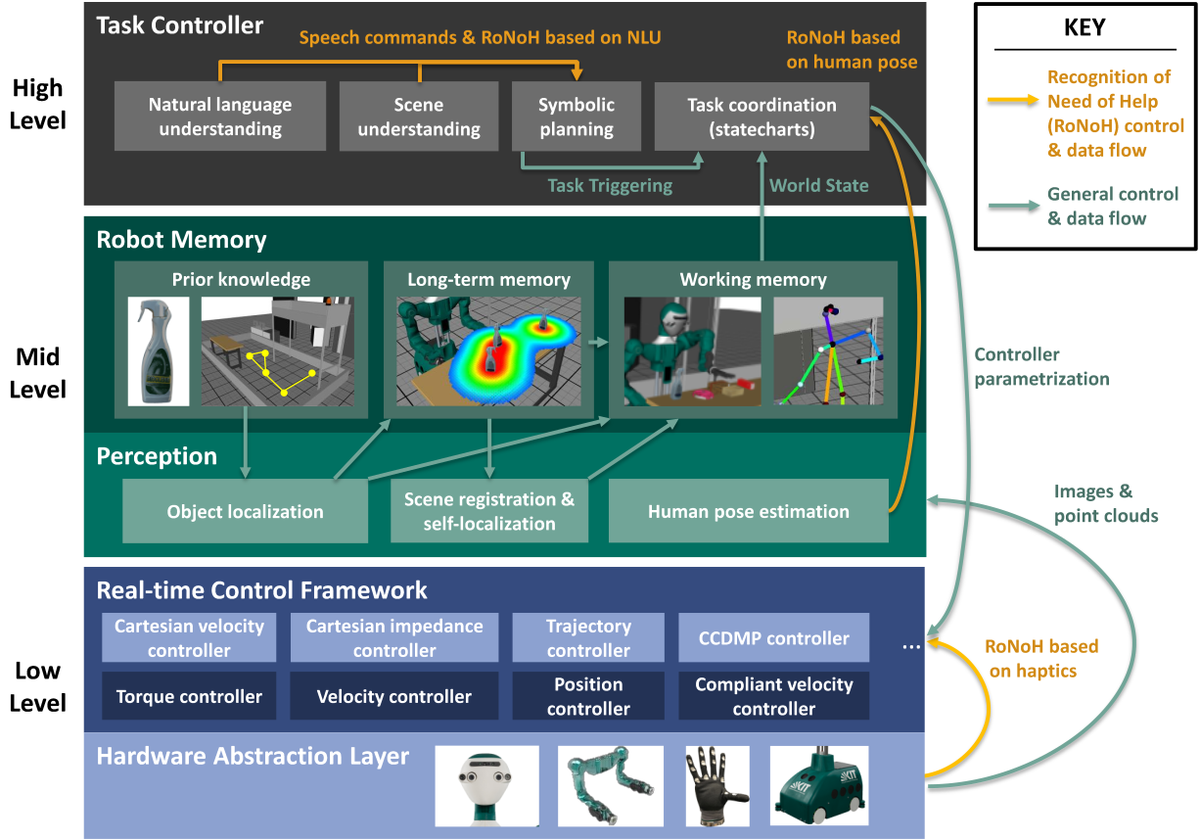

Software and control architecture of the humanoid robot ARMAR-6

To adequately control the high-performance actuators of ARMAR-6, we developed ArmarXRT, a robot-independent, real-time-capable control framework. Its concepts allow combining and switching between different control approaches with varying cycle times and real-time requirements while maintaining full compatibility with our existing robot framework ArmarX. We abstract the robot as a set of virtual control devices and sensor devices. Control devices represent actuators or the power management system: they receive set-points from higher-level components, convert them into the hardware-specific format, and send them to the real hardware. Sensor devices receive real data (e.g. motor ticks) or virtual data (e.g. control frequency) and make it available to higher-level components. To support flexible and reusable control mechanisms, the framework offers a two-level hierarchy for controllers:

- Hardware-specific joint-controllers handle low-level control tasks such as encoding radian positions into encoder ticks or calculating the motor current from a torque target.

- Hardware-independent multi-joint-controllers handle mid- or high-level tasks such as joint trajectory execution or Cartesian velocity control.
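The two-level hierarchy can be illustrated with a minimal C++ sketch. It is not the ArmarXRT API: the type names `PositionJointController` and `LinearJointTrajectory`, the `ticksPerRevolution` parameter, and the linear interpolation are assumptions made purely for illustration. The point is that the multi-joint-controller only writes radian targets, while each joint-controller owns the conversion into its hardware format.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical hardware-specific joint-controller: converts a joint position
// target given in radians into encoder ticks for one particular actuator.
struct PositionJointController {
    double ticksPerRevolution;  // assumed encoder resolution of this actuator
    double targetRad = 0.0;     // set-point written by a multi-joint-controller

    std::int32_t toHardware() const {
        const double twoPi = 2.0 * std::acos(-1.0);
        return static_cast<std::int32_t>(
            std::lround(targetRad / twoPi * ticksPerRevolution));
    }
};

// Hypothetical hardware-independent multi-joint-controller: interpolates
// linearly between two joint configurations. It knows nothing about ticks.
struct LinearJointTrajectory {
    std::vector<double> start;  // joint angles at t = 0, in radians
    std::vector<double> goal;   // joint angles at t = duration, in radians
    double duration;            // seconds

    void run(double t, std::vector<PositionJointController*>& joints) const {
        assert(joints.size() == start.size() && start.size() == goal.size());
        // Clamp the normalized time to [0, 1] so the target never overshoots.
        const double s = std::min(1.0, std::max(0.0, t / duration));
        for (std::size_t i = 0; i < joints.size(); ++i) {
            joints[i]->targetRad = start[i] + s * (goal[i] - start[i]);
        }
    }
};
```

In this sketch, swapping an actuator for one with a different encoder only changes the `PositionJointController` instance; the trajectory controller stays untouched, which is the property that lets the same multi-joint-controller run against simulated and real joints.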

With these mechanisms we offer the same software interfaces on the real robot and in simulation. This speeds up development, since the same multi-joint-controllers run in simulation and on the real robot. The control framework is divided into a real-time-capable part and a non-real-time part. The non-real-time part provides communication interfaces to non-real-time software modules and makes it possible to use existing components, including the platform control, motion planning, our memory architecture, and visualization of the internal state. It also handles all management functionality such as controller creation and destruction. The real-time-capable control thread handles bus communication, controller execution, and the non-blocking interfacing with higher-level components via the TCP- or UDP-based communication protocols using ArmarX/Ice.
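How the real-time thread exchanges data with the non-real-time part without blocking is not detailed here. One common technique for this kind of handoff is a triple buffer, sketched below in C++ under the assumption of exactly one writer thread and one reader thread. The class `TripleBuffer` and its method names are hypothetical and not taken from ArmarX.

```cpp
#include <atomic>
#include <cassert>

// Hypothetical single-writer, single-reader "latest value" mailbox.
// Neither side ever waits on a lock; each call is one atomic exchange at most.
template <typename T>
class TripleBuffer {
public:
    TripleBuffer() { assert(shared_.is_lock_free()); }

    // Writer side: fill this slot completely, then call publish().
    // After publish() the slot holds stale data and must be overwritten.
    T& writeSlot() { return buffers_[writeIdx_]; }

    void publish() {
        // Hand the written buffer over and take the shared one for writing.
        const unsigned prev =
            shared_.exchange(writeIdx_ | kFresh, std::memory_order_acq_rel);
        writeIdx_ = prev & kIndexMask;
    }

    // Reader side: returns true if a newer value was picked up.
    bool update() {
        if ((shared_.load(std::memory_order_acquire) & kFresh) == 0) {
            return false;  // nothing new since the last update
        }
        const unsigned prev =
            shared_.exchange(readIdx_, std::memory_order_acq_rel);
        readIdx_ = prev & kIndexMask;
        return true;
    }

    const T& read() const { return buffers_[readIdx_]; }

private:
    static constexpr unsigned kIndexMask = 3u;  // low bits: buffer index
    static constexpr unsigned kFresh = 4u;      // flag: shared buffer is new

    T buffers_[3]{};
    unsigned writeIdx_ = 0;             // touched by the writer only
    unsigned readIdx_ = 1;              // touched by the reader only
    std::atomic<unsigned> shared_{2u};  // index of the buffer in between
};
```

With such a mailbox, a non-real-time component could publish set-points at its own pace while the control thread calls `update()` once per cycle and keeps using the previous value when nothing new has arrived. Intermediate values are dropped by design, which suits set-points but not event streams.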

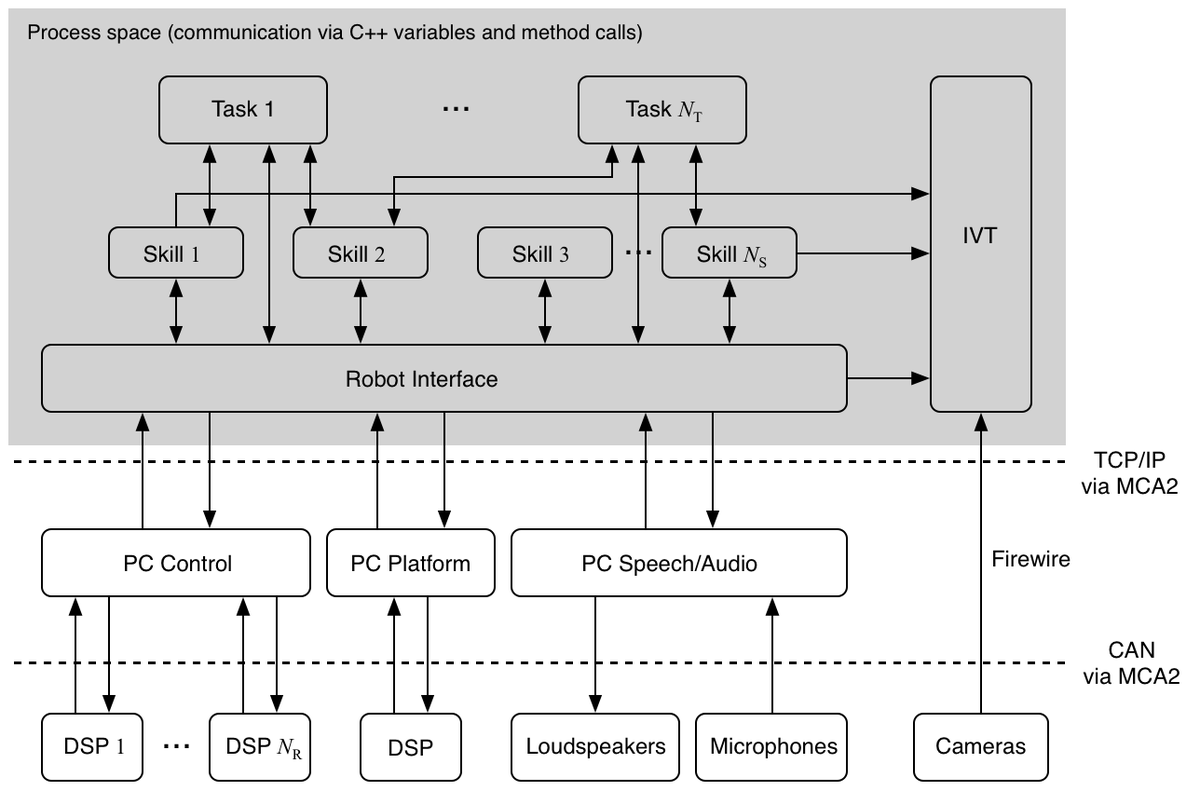

Software and control architecture of the humanoid robot ARMAR-III

The software and control architecture of the humanoid robot ARMAR-III consists of three layers (see figure). On the lowest level, DSPs perform low-level sensorimotor control, realized as cascaded velocity-position control loops. On the same level, hardware such as microphones, loudspeakers, and cameras is available. All these elements are connected to the PCs in the mid-level, either directly or via CAN bus. The software in the mid-level is realized using the Modular Controller Architecture (MCA2). The PCs in the mid-level are responsible for higher-level control (forward and inverse kinematics), the holonomic platform, and speech processing.
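The cascaded velocity-position structure mentioned above can be sketched generically in C++. This is a textbook cascade, not the DSP firmware of ARMAR-III: the gains, the velocity limit, and the choice of a proportional outer loop with a PI inner loop are all assumptions for illustration.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical cascaded controller: an outer position loop produces a
// velocity target, and an inner velocity loop turns it into a motor command.
struct CascadedPositionController {
    double kpPosition;   // outer loop: proportional gain on position error
    double kpVelocity;   // inner loop: proportional gain on velocity error
    double kiVelocity;   // inner loop: integral gain on velocity error
    double maxVelocity;  // limit applied to the outer loop's output
    double velocityErrorIntegral = 0.0;

    double step(double positionTarget, double positionMeasured,
                double velocityMeasured, double dt) {
        assert(dt > 0.0);
        // Outer loop: position error -> velocity target, clamped to the limit.
        const double desired = kpPosition * (positionTarget - positionMeasured);
        const double velocityTarget =
            std::max(-maxVelocity, std::min(maxVelocity, desired));
        // Inner loop: PI control on the velocity error.
        const double velocityError = velocityTarget - velocityMeasured;
        velocityErrorIntegral += velocityError * dt;
        return kpVelocity * velocityError + kiVelocity * velocityErrorIntegral;
    }
};
```

The clamp between the two loops is what makes the cascade convenient in practice: a large position error cannot demand more than `maxVelocity`, regardless of how the inner loop is tuned.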

The first two levels can be regarded as stable, i.e. the implemented modules remain unchanged. The programming of the robot takes place on the highest level only. Here, the so-called robot interface allows for convenient access to the robot's sensors and actuators via C++ variables and method calls.

To allow for effective and efficient programming of the robot, two abstraction levels are defined in addition to direct access to the robot's sensors and actuators: tasks and skills. Skills implement atomic capabilities such as platform navigation, visual object search, grasping objects, placing objects, handing over objects, opening doors, closing doors, etc. Tasks operate on a higher level and are composed of several skills, e.g. bringing juice from the fridge.
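The relation between tasks and skills can be sketched in C++ as follows. The `Skill` and `Task` types are hypothetical and do not reproduce the ARMAR-III robot interface; the sketch only assumes that a skill reports success or failure and that a task runs its skills in sequence.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <string>
#include <vector>

// Hypothetical skill: an atomic capability that reports success or failure.
struct Skill {
    std::string name;
    std::function<bool()> execute;
};

// Hypothetical task: an ordered composition of skills.
struct Task {
    std::string name;
    std::vector<Skill> skills;

    // Runs the skills in order and stops at the first failure.
    // Returns the number of skills that completed successfully.
    std::size_t run() const {
        std::size_t completed = 0;
        for (const Skill& skill : skills) {
            assert(skill.execute);  // every skill needs a callable
            if (!skill.execute()) {
                break;
            }
            ++completed;
        }
        return completed;
    }
};
```

A task such as bringing juice from the fridge would then be a `Task` whose `skills` vector lists navigation, door opening, grasping, and hand-over in that order. Real systems usually need more than a linear sequence, for instance recovery behavior when a skill fails, which this sketch deliberately leaves out.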